This post is Part 4 of a 4-part series. Be sure to check out the other posts in the series for a deeper dive into our AI-powered business plan generator.

Part 1: How We Built an AI-Powered Business Plan Generator Using LangGraph & LangChain

Part 2: How We Optimized AI Business Plan Generation: Speed vs. Quality Trade-offs

Part 3: How We Created 273 Unit Tests in 3 Days Without Writing a Single Line of Code

Part 4: AI Evaluation Framework — How We Built a System to Score and Improve AI-Generated Business Plans

Introduction: The Challenge of Evaluating AI Business Plans

Evaluating AI-generated content objectively is complex. Unlike structured outputs with clear right or wrong answers, business plans involve strategic thinking, feasibility assessments, and coherence, making evaluation highly subjective.

This raised key challenges:

- How do we quantify “good” vs. “bad” business plan content?

- How can we ensure that AI self-improves over time?

- How do we make the evaluation consistent and unbiased?

To solve this, we developed a structured scoring framework that allows us to evaluate, iterate, and enhance AI-generated business plans. Our approach combined multiple evaluation frameworks, each tailored to different sections of the plan, ensuring both accuracy and strategic depth.

It is important to note that this detailed evaluation system was part of our original implementation, where each section underwent rigorous assessment and iteration. However, due to performance constraints, we simplified the evaluation process in the MVP to prioritize generation speed. This trade-off helped us deploy faster while keeping the evaluation framework as part of ongoing research for future improvements.

Recent research in LLM-based evaluation has confirmed the effectiveness of structured AI evaluation. Studies such as Prometheus 2: An Open Source Language Model Specialized in Evaluating Other Language Models (2024) and OpenAI’s Evals framework have demonstrated that LLMs can be reliable evaluators when guided by structured scoring criteria.

Designing the Evaluation Framework

We took inspiration from teacher grading systems and applied it to AI-generated business plans. This led to the creation of multiple evaluation frameworks, each tailored to different types of sections.

Evaluation Frameworks by Section Type

Instead of using a one-size-fits-all scoring method, we developed customized scoring criteria depending on the type of content being evaluated:

Strategic Planning & Business Model

- Assessed for clarity, SMART goal alignment, and feasibility.

- Required explicit action plans and structured goal setting.

Market Research & Competitive Analysis

- Focused on depth of research, differentiation, and real-world data validation.

- AI responses were scored on market realism and competitive positioning.

Financial Planning & Projections

- Evaluated financial assumptions, revenue modeling, and expense breakdowns.

- AI outputs had to be quantified, internally consistent, and reasonable.

Operational & Execution Strategy

- Scored on feasibility, risk mitigation, and execution roadmap.

- Required clear team structure and resource allocation.

Marketing & Sales Strategy

- Assessed on target audience alignment, conversion potential, and branding consistency.

- AI-generated marketing plans had to be specific and data-driven.

Each framework assigned weights to different scoring dimensions, ensuring that critical areas (e.g., financial viability) influenced the overall score more than less critical ones. This aligns with recent findings from Prometheus 2: An Open Source Language Model Specialized in Evaluating Other Language Models, which emphasized the need for fine-grained evaluation benchmarks using LLMs.

Evaluation Scoring Mechanism

Each section was scored from 1 to 5, following a rubric:

AI-Driven Iterative Improvement

To enable AI to self-improve, we designed a multi-step feedback loop:

Step 1: Draft Generation

- The AI generates an initial draft based on user input.

- Sections are structured according to predefined templates.

Step 2: AI Self-Evaluation

- The AI reviews its own output against the section-specific evaluation frameworks.

- Identifies areas with missing data, vague explanations, or weak strategic alignment.

Step 3: AI Self-Improvement

- AI regenerates weak sections, ensuring better alignment with evaluation criteria.

- If financials or market analysis are lacking, AI adjusts assumptions and reasoning.

Step 4: Final Evaluation

- The AI conducts a second scoring pass to validate its own improvements.

- The final version is compared against past iterations to track progress.

This iterative generate → evaluate → improve process aligns with state-of-the-art research showing that LLM-based evaluations improve over multiple passes.

Statistical Validation: Did It Actually Work?

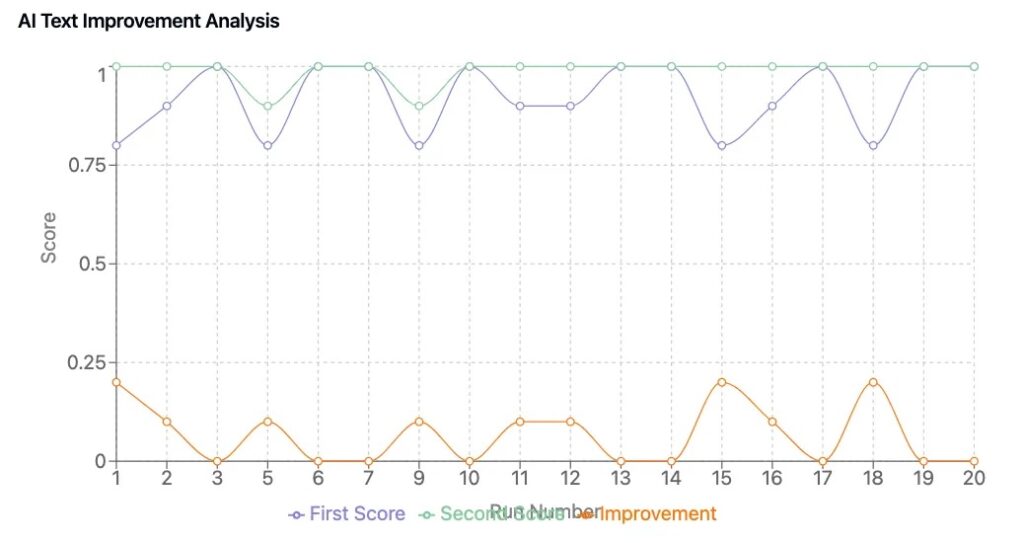

To confirm that our framework led to tangible improvements, we ran a 50-plan test cycle, comparing AI-generated business plans with and without self-improvement loops.

Key Findings

- Scoring Consistency: AI-generated content scored consistently, reducing random fluctuations in plan quality.

- Measurable Improvement: Plans that underwent AI-driven refinement improved by 0.6 to 1.2 points on average.

- Better Business Insights: Refined versions had stronger strategic alignment, clearer financial projections, and more persuasive messaging.

These findings reflect trends observed in LLM evaluation research, where structured grading frameworks and iterative scoring significantly improve AI-generated content.

Key Takeaways

1. AI Can Self-Improve When Given Structured Evaluation Criteria

- A well-defined scoring framework allows AI to recognize and correct its own weaknesses.

2. Quantitative Scoring Ensures Objective Content Validation

- Subjective assessments were minimized through standardized grading rubrics.

3. The Evaluation Framework Was Designed for Advanced AI Iterations, but the MVP Focused on Speed

- The original implementation included multiple evaluation cycles per section.

- Due to performance constraints, we simplified this in the MVP but retained it for future research and improvement.

4. LLM Evaluators Are an Industry-Wide Trend

- New AI evaluation models (e.g., Prometheus 2: An Open Source Language Model Specialized in Evaluating Other Language Models, LLMs-as-Judges) are improving consistency and reducing bias. (arxiv.org)

- The AI evaluation field is evolving toward multi-layered scoring frameworks, validating the approach we pioneered.

Try Our AI-Powered Business Suite

We built and optimized our AI-driven business plan generator at DreamHost, ensuring enterprise-level performance and scalability.

DreamHost customers can click here to get started and explore our AI-powered business plan generator and other AI tools.

This post is Part 4 of a 4-part series. Be sure to check out the other posts in the series for a deeper dive into our AI-powered business plan generator.

Part 1: How We Built an AI-Powered Business Plan Generator Using LangGraph & LangChain

Part 2: How We Optimized AI Business Plan Generation: Speed vs. Quality Trade-offs

Part 3: How We Created 273 Unit Tests in 3 Days Without Writing a Single Line of Code

Part 4: AI Evaluation Framework — How We Built a System to Score and Improve AI-Generated Business Plans